2016 was the year that speculation about the future of artificial intelligence reached a fever pitch, and in 2017 so far, the hype heatwave shows no signs of cooling. Common wisdom is that academic researchers and tech companies are right on the verge of creating super-intelligent algorithms that will automate any task a human can perform. After all, if computers can beat humans in a game as complex as Go, what can’t they do?

But when you look at the universe of machine learning and AI tools that are actually on the market in 2017, there’s a bit of a let-down. The fact is that, outside of a relatively small number of (mostly perception-related) problems, AI applications to date don’t make wide use of deep learning or anything else particularly “advanced” compared to the state of the art in academic research. Instead, most of the core technology underlying these products is enabled by advances in traditional hardware and software engineering, not super-human robots, which hardly seems like magic compared to what we’ve been promised.

Why is there such a gulf between the AI hype and actual products on the market?

State of Artificial Intelligence in 2017

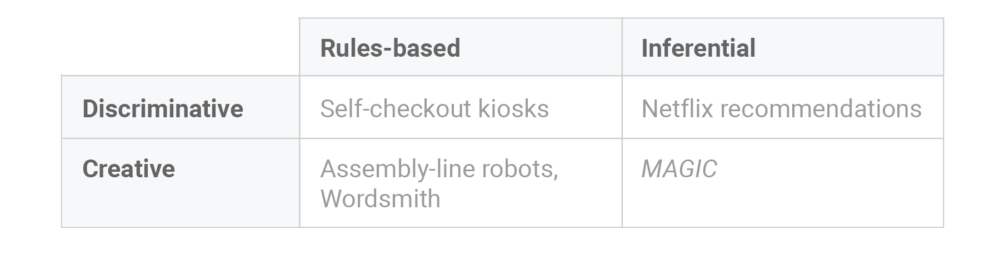

To better understand the AI landscape in 2017, it’s useful to think about particular AI solutions along two axes: whether an algorithm is rules-based or inferential, and whether it is discriminative or creative.

Rules-based AI has existed for decades, and typically involves a programmer defining (by hand) a set of rules that determine the system’s behavior given specific conditions in an “if-then” fashion. Rules-based AI tends to shine on problems where simple heuristics are preferable to statistical models, either because available training data is expensive to obtain, or because deterministic results are valued over achieving the highest possible accuracy. Assembly line robots are a great example of rules-based AI: manufacturers already know how to efficiently assemble cars, so it makes more sense to hard-code the rules for assembling pieces of a car into the robots than to design robots that could learn those rules on their own.

Automated Insights’ product, Wordsmith, is another example of rules-based AI: it provides an interface for creating rules that determine what words, phrases, or sentences appear in output narratives given conditions in a dataset. Much like a robot on an assembly line saves time by quickly and accurately performing the tasks it’s programmed to do, a well-designed Wordsmith project generates data-driven prose according to a specific set of instructions provided by the user. The result is reactive, personalized content on a scale much larger than what can be achieved with human writers alone.
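To make the “if-then” idea concrete, here is a minimal sketch of rules-based narrative generation in Python. It is not Wordsmith’s actual interface; the function name `describe_sales`, the field names, and the thresholds are all hypothetical, chosen only to illustrate how hand-written rules map conditions in the data to phrases.

```python
def describe_sales(row):
    """Turn one row of data into a sentence using hand-written rules."""
    # Hypothetical fields: 'region', 'sales', 'prior_sales'.
    change = (row["sales"] - row["prior_sales"]) / row["prior_sales"]

    # Each branch is a rule the author wrote by hand; nothing is learned.
    if change > 0.10:
        trend = "surged"
    elif change > 0:
        trend = "edged up"
    elif change == 0:
        trend = "held steady"
    else:
        trend = "fell"

    if trend == "held steady":
        return f"Sales in {row['region']} held steady."
    return f"Sales in {row['region']} {trend} {abs(change):.0%} from the prior period."


print(describe_sales({"region": "the Northeast", "sales": 120, "prior_sales": 100}))
# prints "Sales in the Northeast surged 20% from the prior period."
```

The same input always produces the same sentence, which is exactly the deterministic behavior that makes rules-based systems attractive.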

Inferential AI (i.e., machine learning), on the other hand, “learns” a set of rules directly from existing data, often with the help of statistics and mathematical optimization. Machine learning has seen a surge in attention in the last decade, and many people are familiar with some of the more spectacular examples like Netflix movie recommendations and Amazon Echo’s voice recognition. Inferential AI tends to be useful in cases where problems can be reasonably constrained, and large datasets of examples can be cheaply obtained. By studying the behavior of millions of monthly users, for example, Netflix’s machine learning engineers can design systems that provide recommendations based on insights automatically extracted from historical data.
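As a toy contrast with hand-written rules, the sketch below “learns” a single rule from labeled examples instead of having a programmer choose it. It is only an illustration under stated assumptions: the function `learn_threshold` and the viewing data are invented, and real recommendation systems are far more elaborate than a one-feature cutoff.

```python
def learn_threshold(examples):
    """Pick the cutoff on a single feature that misclassifies the fewest examples.

    `examples` is a list of (value, label) pairs with boolean labels.
    The learned rule is: predict True when value >= threshold.
    """
    candidates = sorted({value for value, _ in examples})
    best_threshold, best_errors = None, len(examples) + 1
    for threshold in candidates:
        # Count how many examples this candidate rule gets wrong.
        errors = sum((value >= threshold) != label for value, label in examples)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors


# Hypothetical data: (minutes watched, did the user finish the series?)
history = [(5, False), (12, False), (30, False), (45, True), (60, True), (90, True)]
threshold, errors = learn_threshold(history)
print(threshold, errors)  # prints "45 0"
```

Nobody typed the number 45 into the program; it came out of the data, and different data would yield a different rule.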

In parallel to the rules-based versus inferential categorization of AI, it’s also useful to contrast discriminative AI solutions with creative AI solutions. The goal of discriminative AI is straightforward: to make a decision with the highest possible accuracy (“is there a cat in this picture?”, “whose faces appear in this video?”, “what words were spoken in the previous utterance?”).

In contrast, creative AI systems like autonomous cars and chatbots use the decisions made by discriminative systems to produce a tangible result. Because the performance of creative systems relies on stitching together the results of many decisions, they tend to be much more difficult to design and build than discriminative systems. The tricky part of designing an autonomous car, for example, is not only building systems that can successfully identify whether the next light is red, yellow, or green and whether it is safe to merge into an adjacent lane. The car must also translate all of these decisions into a trajectory that obeys the rules of the road and is respectful to other drivers.

Creative-inferential AI is magic

Creative-inferential AI systems, which “learn” without direct human design and produce actions that feel natural to humans, are the holy grail for researchers and product engineers. Their value is immediately clear: improving the speed, safety, and reliability of repetitive or resource-intensive work has been a staple of technological progress for hundreds of years, and there’s no doubt that advancements that made possible the widespread use of creative-inferential algorithms would have a tremendous impact on the world. Many professions, from low-wage jobs like farm work and fast food preparation to highly skilled industries like healthcare and education, have barely changed since the 1970s, and are areas ripe for the productivity gains promised by truly useful AI.

Clarke’s third law that “any sufficiently advanced technology is indistinguishable from magic” has a corollary in AI research: any sufficiently advanced improvement in AI is indistinguishable from intelligence. By this definition, creative-inferential AI is squarely within “magic” territory. The idea of self-learning algorithms that interact seamlessly with humans is a classic science fiction trope, and if you believe the hype, will be possible in a huge variety of applications in the near future.

Magic is harder than you think, and you probably don’t want it anyway

The problem with the expectations around AI is that much of the optimism about the near-term promise of creative-inferential AI is unfounded. Researchers’ opinions on the near-term promise of AI are decidedly mixed between optimism and pessimism, but there is no question that major breakthroughs are still needed before these systems become widely available.

Perhaps the most successful creative-inferential AI system currently in production is Google’s recently revamped Translate service, which now relies entirely on deep neural networks. Translate’s success represented a huge accomplishment in AI productization, but it hardly heralds a brave new world of thinking robots; despite significant improvements, the Translate service still performs well below the level of human translators. Nonetheless, users tend to be quite forgiving of small mistranslations as long as they can understand the gist of the content, so the system doesn’t have to be perfect in order to be useful.

Users are much less forgiving of sub-human performance in other applications, however, and in these cases, the effects of imperfect creative-inferential AI can quickly ruin the user experience. Chatbots are a great example: despite all of the recent hype, a common joke in the chatbot developer community illustrates the difficulty of building natural language user interfaces that provide a user experience as fluid as touch- and click-based UIs. And as Microsoft Research’s experiment with a Twitter bot named Tay demonstrated, current-gen AI is no match for malicious or adversarial users.

Love this! #Bots #swag #AI @ChatbotConf pic.twitter.com/UDCG7DzjNn

— Amir Shevat (@ashevat) October 13, 2016

The lesson from the disappointing (and sometimes disastrous) results in these early systems is that the relationship between error rate and usefulness is complicated for creative-inferential AI. In discriminative systems, the rate at which the system makes errors corresponds directly to how useful the system is. But in creative systems, the number of errors isn’t the only important metric: the ways in which the system fails can magnify or reduce the effect of those errors. If an autonomous car responds to a miscalculation by swerving quickly off the road, for example, the outcome for the passenger would be much different than if the car slowly pulled to a stop in a safe location.
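A bit of arithmetic shows why the error rate alone is not enough. The sketch below compares two hypothetical systems that make mistakes equally often but fail in different ways; every number in it (the 1% error rate, the shares, and the costs) is invented purely for illustration, and `expected_cost` is a made-up helper, not a standard metric.

```python
def expected_cost(error_rate, failure_modes):
    """Expected cost per decision.

    `failure_modes` maps a failure name to (share of errors, cost when it happens).
    The shares must sum to 1.
    """
    total_share = sum(share for share, _ in failure_modes.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("failure-mode shares must sum to 1")
    # Weight each failure's cost by how often it occurs among all errors.
    return error_rate * sum(share * cost for share, cost in failure_modes.values())


# Two hypothetical systems with the same 1% error rate but different failure behavior.
swerves = {"swerve off road": (0.5, 1000.0), "pull over safely": (0.5, 1.0)}
pulls_over = {"swerve off road": (0.01, 1000.0), "pull over safely": (0.99, 1.0)}

print(expected_cost(0.01, swerves))
print(expected_cost(0.01, pulls_over))
```

With these made-up numbers, the first system is roughly 45 times more costly per decision than the second, even though both would report the same accuracy.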

From language models to automatic photo captions, failure cases in creative-inferential models today are incredibly unpredictable. In toy demos, these failures are humorous, but in a commercial product, they would be completely unacceptable. Expecting perfection from these systems is unrealistic, but even if overall performance reaches an acceptable threshold, the non-deterministic behavior inherent to creative-inferential AI can still make a product unpleasant to use. While testing experimental features for Android, for example, Google engineers discovered that predictively rearranging a user’s apps led to a frustrating experience: even with a system that could predict a user’s next action with 99% accuracy, muscle memory for the app menu was more important to user experience than overall efficiency.

In a related (and equally complex) problem, introspection is another huge hurdle for creative-inferential systems. Many complex inferential AI systems (deep neural networks especially) are extremely difficult to interrogate when they make a mistake, making the route to better performance unclear. If a user spots an error in the output of a rules-based system, she knows immediately where to search in her code to correct the error. With a neural network-based approach, however, that error simply becomes another training point fed into subsequent training iterations. There is no promise that the mistake would be fixed, or that fixing that mistake wouldn’t cause other mistakes to occur.

This isn’t to say that limited applications of more advanced creative-inferential AI are impossible. Personal assistants like Siri and Alexa will continue to improve, some form of autonomous vehicles will be productized, and more robots will become available to consumers. Nonetheless, the twin problems of non-deterministic behavior and debugging complexity are huge challenges that have seen little progress despite the recent flurry of excitement around AI. Until major advancements make “magical” creative-inferential systems more reliable, it is unclear whether new AI research will be fully realized outside of academia. And until then, any far-fetched claims of magical AI completely transforming the economy, replacing all human work, and ushering in the robot apocalypse are just smoke and mirrors.